In an update aimed at developers, Google has made its Gemini models more accessible and developer-friendly. Gemini is designed to compete with models like OpenAI's GPT-4, and Google's recent compatibility work makes it easier to integrate into existing applications. If you're a developer exploring alternatives or supplementary tools to OpenAI, here's why Gemini might be the right choice.

Gemini Joins OpenAI Library: Simplifying Access

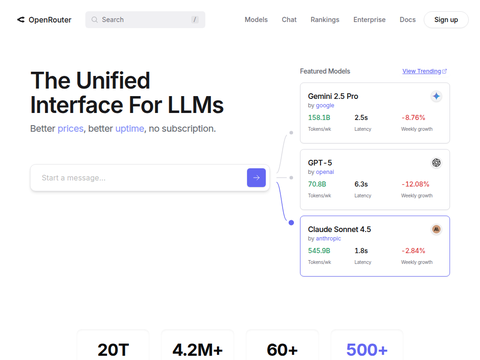

Google's Gemini models can now be called through the OpenAI library, so developers already familiar with OpenAI tools can keep using the client they know. In practice, this means pointing the OpenAI client at a Gemini endpoint with a Gemini API key, which lets developers use Gemini alongside other AI models within their existing workflows. By meeting developers inside a popular ecosystem, Google reduces the friction that usually comes with adopting a new AI technology.

Because Gemini is reachable through the OpenAI library, developers don't need to overhaul existing code or processes. Instead, they can experiment with Gemini's capabilities using their current tools, which gives them an easy path to enhance or complement their AI-driven applications. This flexibility is particularly appealing to developers who want to optimize or expand software functionality with minimal disruption.
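As a rough illustration of what "minimal disruption" can look like, the sketch below keeps per-provider connection settings in one table, so that switching between OpenAI and Gemini becomes a configuration change. The `PROVIDERS` table, the `client_settings` and `make_client` helpers, the environment variable names, and the `gpt-4o-mini` model choice are all assumptions made for illustration. The Gemini base URL and model name are the ones used in this article's example, and the exact endpoint path should be confirmed against Google's documentation.

```python
import os

# Hypothetical provider table. The environment variable names and the OpenAI
# model are illustrative assumptions; the Gemini values mirror this article.
PROVIDERS = {
    "openai": {
        "base_url": "https://api.openai.com/v1",
        "api_key_env": "OPENAI_API_KEY",
        "model": "gpt-4o-mini",
    },
    "gemini": {
        "base_url": "https://generativelanguage.googleapis.com/v1beta/",
        "api_key_env": "GEMINI_API_KEY",
        "model": "gemini-1.5-flash",
    },
}


def client_settings(provider: str) -> dict:
    """Return the client keyword arguments and model name for a provider."""
    try:
        cfg = PROVIDERS[provider]
    except KeyError:
        raise ValueError(f"unknown provider: {provider!r}") from None
    return {
        "client_kwargs": {
            "api_key": os.environ.get(cfg["api_key_env"], ""),
            "base_url": cfg["base_url"],
        },
        "model": cfg["model"],
    }


def make_client(provider: str):
    """Build an OpenAI-library client for the chosen provider."""
    # Imported lazily so the settings can be inspected without the package.
    from openai import OpenAI

    return OpenAI(**client_settings(provider)["client_kwargs"])
```

With this layout, the rest of the application can call `make_client("gemini")` or `make_client("openai")` and pass the matching `model` value, leaving the request code itself unchanged.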

Simplified Migration Path for Developers

Transitioning to a new AI platform can be daunting, especially when developers have invested significant time integrating existing models. Google recognizes this challenge and offers support for developers looking to migrate to Gemini. Recently launched migration tools and detailed documentation aim to make this transition as smooth as possible. Developers familiar with the OpenAI API can migrate their code with little effort, thanks to the similar syntax and the example guides.

Python Code Example:

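```python
from openai import OpenAI

# Point the standard OpenAI client at Google's Gemini endpoint.
# Confirm the current endpoint path in Google's documentation.
client = OpenAI(
    api_key="gemini_api_key",  # placeholder: replace with your Gemini API key
    base_url="https://generativelanguage.googleapis.com/v1beta/"
)

response = client.chat.completions.create(
    model="gemini-1.5-flash",
    n=1,  # request a single completion
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": "Explain to me how AI works"
        }
    ]
)

# Print the assistant message from the first (and only) choice.
print(response.choices[0].message)
```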

A key highlight of Gemini is its compatibility with existing OpenAI model interfaces. Google is also focused on delivering performance that matches or even surpasses the reliability and speed of competing models, making it a suitable alternative or complement for developers looking to expand their AI capabilities. Migration assistance includes help with prompt adjustments, fine-tuning processes, and examples for tweaking implementation details, all intended to make the transition smooth.
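To make the interface compatibility concrete, here is a minimal sketch of application code written only against the `chat.completions.create` call shape, so it does not care which provider sits behind the client. The `ask` helper and the `FakeCompletions` stub are hypothetical. The stub exists only so the sketch can be exercised without network access or an API key, and it is not part of any library.

```python
from types import SimpleNamespace


def ask(client, model: str, prompt: str) -> str:
    """Send one user prompt through any client exposing chat.completions.create."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
    )
    return response.choices[0].message.content


class FakeCompletions:
    """Hypothetical offline stand-in that echoes the request back."""

    def create(self, model, messages):
        text = f"[{model}] {messages[-1]['content']}"
        message = SimpleNamespace(role="assistant", content=text)
        return SimpleNamespace(choices=[SimpleNamespace(message=message)])


fake_client = SimpleNamespace(chat=SimpleNamespace(completions=FakeCompletions()))

# Offline dry run; with the real library, pass an OpenAI(...) client instead.
reply = ask(fake_client, "gemini-1.5-flash", "Explain to me how AI works")
print(reply)
```

Because `ask` touches only the shared call shape, swapping the stub for a real client configured for Gemini or for OpenAI should not require changes to the function body.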

One standout feature of Gemini is its emphasis on contextual understanding, aimed at supporting more nuanced and complex tasks. Google intends to address some of the limitations observed in earlier AI models, such as losing coherence over extended interactions or misreading domain-specific terminology. Gemini's training draws on Google's extensive data resources, which Google expects to translate into strong performance across a diverse range of use cases.
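On the application side, coherence over extended interactions also depends on what the caller sends: the chat completions interface shown earlier is stateless, so previous turns have to be resent with each request. The sketch below shows one way to keep that history. The `Conversation` class and its `max_messages` trimming policy are assumptions for illustration, not something Google or OpenAI prescribes.

```python
class Conversation:
    """Hypothetical helper that accumulates chat history for a stateless API."""

    def __init__(self, system_prompt: str, max_messages: int = 20):
        if max_messages < 1:
            raise ValueError("max_messages must be at least 1")
        self.system_prompt = system_prompt
        self.max_messages = max_messages  # most recent non-system messages kept
        self.turns = []

    def add(self, role: str, content: str) -> None:
        """Record one user or assistant message."""
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role!r}")
        self.turns.append({"role": role, "content": content})
        # Drop the oldest messages once the history exceeds the limit.
        self.turns = self.turns[-self.max_messages:]

    def as_messages(self) -> list:
        """Return the list to pass as messages=... on the next request."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


chat = Conversation("You are a helpful assistant.", max_messages=4)
chat.add("user", "Explain to me how AI works")
chat.add("assistant", "(model reply would go here)")
chat.add("user", "Can you give a shorter version?")

for message in chat.as_messages():
    print(message["role"], "->", message["content"])
```

Trimming by message count is the simplest policy. A production system would more likely trim by token count or summarize older turns, but those choices are outside what this article covers.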