The Allen Institute for AI (Ai2) has unveiled Molmo, a series of open-source language models that can process both text and images. The release coincided with Meta Platforms Inc.'s Connect 2024 product event, where Meta introduced its own open-source model lineup, Llama 3.2. Two of the Llama 3.2 models are also multimodal, which gives them capabilities similar to Molmo's.

Ai2 is a Seattle-based nonprofit dedicated to advancing machine learning research. The new Molmo series comprises four neural networks. The most capable has 72 billion parameters and the most hardware-efficient has 1 billion. The other two each contain 7 billion parameters.

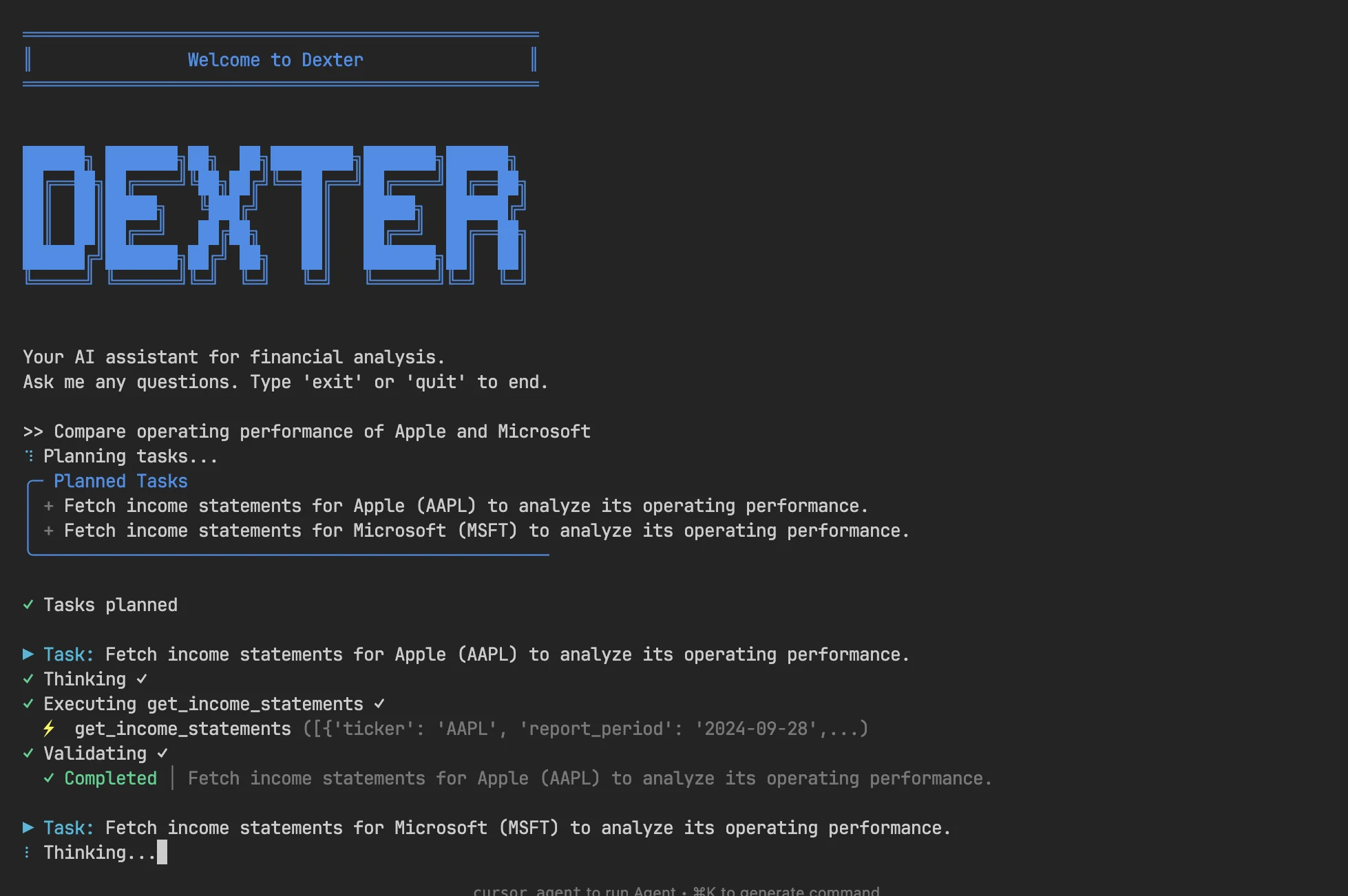

In addition to responding to natural language instructions, every Molmo model can process images. The models can identify objects in an image, count them, and describe what the image shows. They can also handle related tasks such as interpreting data visualizations in charts.

In internal evaluations, Ai2 compared Molmo against several proprietary models across 11 benchmarks. The 72-billion-parameter version achieved an average score of 81.2, slightly ahead of OpenAI's GPT-4o. The two 7-billion-parameter models scored within five points of GPT-4o.

The smallest model in the series, with 1 billion parameters, is the least capable. Nevertheless, Ai2 claims it outperforms some models with ten times as many parameters. Its compact size also makes it suitable for running on mobile devices.

Ai2 attributes Molmo's strong performance partly to its training dataset, which contains hundreds of thousands of images, each paired with a detailed description of the objects it depicts. According to Ai2, learning from these detailed descriptions lets Molmo outperform larger models trained on lower-quality data in object recognition tasks.

Meta's Llama 3.2 series also consists of four open-source neural networks. The first two, with 11 billion and 90 billion parameters respectively, use a multimodal architecture that handles both text and images. Meta states that these models achieve image recognition accuracy comparable to GPT-4o mini, a scaled-down version of GPT-4o.

The remaining two Llama 3.2 models are text-only. The larger has 3 billion parameters, and the smaller has roughly one-third as many (1 billion). Meta claims that both outperform similarly sized models across a range of tasks.