Recently, xAI, a company backed by Elon Musk, officially opened its application programming interface (API) to the public. xAI, a startup closely tied to the social network X, uses X's data to train its large language models (LLMs), such as the Grok series. To attract developers, xAI announced that every developer will receive $25 in free API credits per month through the end of this year.

Since it is already November, the offer effectively covers only two months, or $50 in total.

Musk had announced the public beta of the xAI API three weeks earlier. However, developer uptake fell short of expectations, prompting the company to add the free credits as an incentive.

Is this credit enough to attract developers? For the world's richest man, the sum is trivial whether counted per developer or in total. Still, it may be enough to persuade some developers to try xAI's tools and platform and build applications on the Grok models.

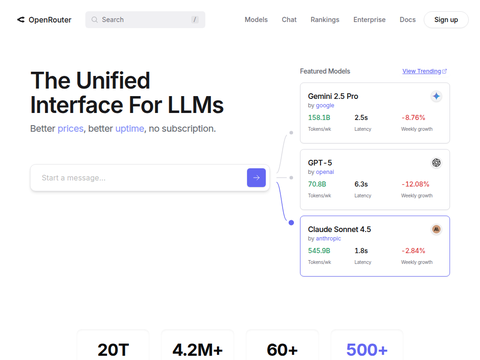

Specifically, xAI's API is priced at $5 per million input tokens and $15 per million output tokens. By comparison, OpenAI's GPT-4o costs $2.50 per million input tokens and $10 per million output tokens, while Anthropic's Claude 3.5 Sonnet costs $3 per million input tokens and $15 per million output tokens. At xAI's rates, the $25 monthly credit therefore covers roughly two million input tokens plus one million output tokens ($10 + $15). For reference, one million tokens is roughly the text of seven to eight novels.
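The credit arithmetic above can be checked with a short Python sketch. It uses only the list prices quoted in this article; the dictionary keys are informal labels rather than official model IDs, and actual bills may differ if prices change.

```python
# Per-million-token list prices in USD, (input, output), as quoted in the article.
# Keys are informal labels, not necessarily official model IDs.
PRICES = {
    "grok-beta": (5.00, 15.00),
    "gpt-4o": (2.50, 10.00),
    "claude-3.5-sonnet": (3.00, 15.00),
}


def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the list-price cost of a workload for the given model."""
    input_price, output_price = PRICES[model]
    # Multiply first, then divide by one million, to keep round numbers exact.
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # The workload discussed above: 2M input tokens and 1M output tokens.
    for model in PRICES:
        print(f"{model:>18}: ${cost_usd(model, 2_000_000, 1_000_000):.2f}")
```

Running it prints $25.00 for grok-beta, exactly the monthly credit, while the same workload would cost $15.00 on GPT-4o and $21.00 on Claude 3.5 Sonnet at these prices.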

As for context length, xAI's API accepts roughly 128,000 tokens per request, shared between input and output, on par with OpenAI's GPT-4o. That is below the 200,000-token window of Anthropic's Claude and far short of the one-million-token window of Google's Gemini 1.5 Flash.
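To see what these limits mean in practice, the sketch below checks whether a request fits each model's window. It assumes, as is typical for these APIs, that the prompt and the reserved output budget count against the same window, and it uses the approximate figures from this paragraph; exact limits vary by model version, and the keys are informal labels.

```python
# Approximate context windows in tokens, as given in the article.
CONTEXT_WINDOWS = {
    "grok-beta": 128_000,
    "gpt-4o": 128_000,
    "claude-3.5-sonnet": 200_000,
    "gemini-1.5-flash": 1_000_000,
}


def fits_in_context(model: str, prompt_tokens: int, max_output_tokens: int) -> bool:
    """True if the prompt plus the reserved output budget fits the model's window.

    Assumes input and output share a single window.
    """
    return prompt_tokens + max_output_tokens <= CONTEXT_WINDOWS[model]


def models_that_fit(prompt_tokens: int, max_output_tokens: int) -> list[str]:
    """List the models whose window can hold the whole request."""
    return [m for m in CONTEXT_WINDOWS if fits_in_context(m, prompt_tokens, max_output_tokens)]


if __name__ == "__main__":
    # A 150k-token document plus a 4k-token answer exceeds the 128k windows.
    print(models_that_fit(150_000, 4_000))
```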

In addition, the API currently offers only the grok-beta model and only text generation. It does not yet provide image generation like that in Grok 2, which is powered by Black Forest Labs' Flux.1 model.

New Grok Model Set to Launch

In a blog post, xAI said the API currently provides access to a preview of a new Grok model that is in the final stages of development. xAI also announced that a new Grok vision model will be released next week.

grok-beta also supports function calling, which lets the model turn a user's request into a structured call to an external application or service that the developer has connected. The application then executes that call, so the model can effectively act on the user's behalf, provided the connected service permits such access.
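A minimal sketch of the application side of function calling follows. It assumes the OpenAI-style `tools` format, in which the model returns tool calls whose `arguments` field is a JSON string; treat that schema as an assumption and consult xAI's documentation for the exact details. `get_weather`, its stubbed data, and the sample tool call are hypothetical. Note that the model only proposes the call; the application's own code decides whether and how to run it.

```python
import json


def get_weather(city: str) -> dict:
    """Hypothetical tool exposed to the model; returns stubbed data."""
    # A real application would query a weather service here.
    return {"city": city, "forecast": "sunny", "high_c": 24}


# Tool definition in the OpenAI-style "tools" format, sent along with the chat request.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get today's forecast for a city.",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    }
]

# Only functions listed here can be executed, whatever the model asks for.
REGISTRY = {"get_weather": get_weather}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute the tool calls the model requested and build 'tool' role
    messages to send back so the model can compose its final answer."""
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        output = REGISTRY[name](**args)
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(output),
        })
    return results


if __name__ == "__main__":
    # A made-up tool call of the shape an OpenAI-compatible model might return.
    sample_calls = [{
        "id": "call_1",
        "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Memphis"}'},
    }]
    for message in run_tool_calls(sample_calls):
        print(message)
```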

Compatible with Competitors

xAI said on its X (formerly Twitter) account that its API is compatible with the OpenAI and Anthropic software development kits (SDKs). Developers already using those SDKs can therefore switch to grok-beta or other models on the xAI platform with relatively few code changes.
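What this compatibility means at the wire level can be sketched with the Python standard library: the request body is the same OpenAI-style chat completions payload, and only the base URL, API key, and model name change. The xAI base URL `https://api.x.ai/v1` reflects xAI's documentation at launch and should be treated as an assumption to verify. The function below builds the request without sending it.

```python
import json
import urllib.request

# Base URLs of two OpenAI-compatible endpoints.
# The xAI value is an assumption based on xAI's launch documentation.
BASE_URLS = {
    "openai": "https://api.openai.com/v1",
    "xai": "https://api.x.ai/v1",
}


def build_chat_request(provider: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completions request.

    Switching providers changes only the base URL, the key, and the model name.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URLS[provider]}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    for provider, model in [("openai", "gpt-4o"), ("xai", "grok-beta")]:
        req = build_chat_request(provider, "YOUR_API_KEY", model, "Hello!")
        print(req.get_method(), req.full_url)
        # Sending would require a real key: urllib.request.urlopen(req)
```

With the official OpenAI Python SDK, the equivalent switch is typically made by passing `base_url` (together with an xAI API key) when constructing the `OpenAI` client.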

Separately, xAI recently brought online its "Colossus" supercluster in Memphis, Tennessee, which houses 100,000 Nvidia H100 GPUs for training new models. It is among the largest facilities of its kind in the world and is currently running at full capacity.